Why knowing the geography of your motherboard matters when it comes to optimizing graphics and animation.

If you’ve ever attempted a casual conversation with a graphics engineer, they've probably worked the word “shader” in somewhere. If pressed (and even if not pressed), they’ll explain that shaders are modern animation code of some sort. They’re fast because of some fancy trigonometry they do, or because of some very math-y chips on your graphics card. And while they’re not wrong exactly, they’re burying the lede. Shaders aren’t fast because of how they work so much as where. The geography matters. To see why, just take a look inside a Commodore 64.

It's easier than it sounds. If you find yourself near one, I recommend the following: project confidence, grab a Phillips screwdriver from somewhere, and remove the three screws along the bottom-front edge of the case. It will pop open for you like the hood of a Buick.

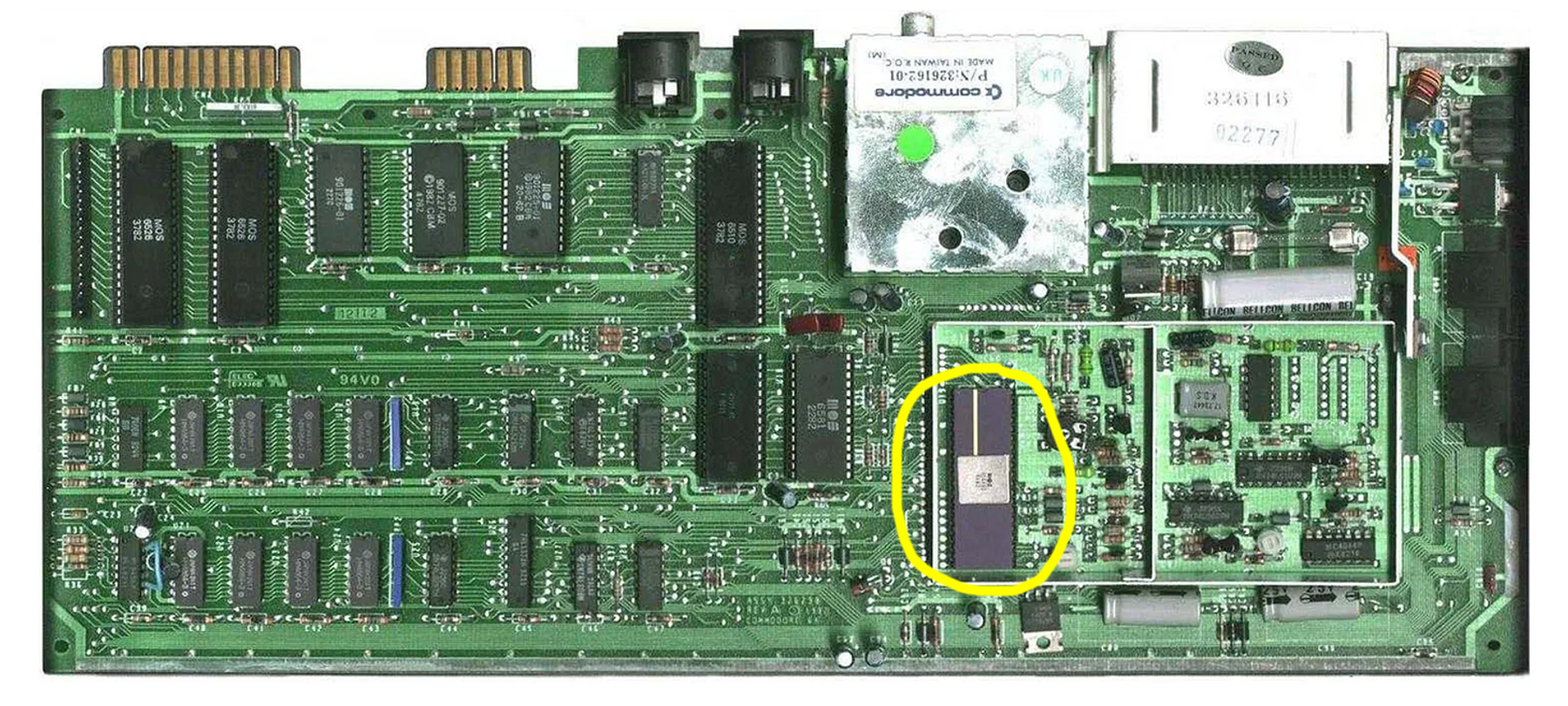

Inside, you’ll find a verdant green field peppered with orderly black chips, connected by mostly horizontal lines. This is the motherboard, and for all the hype a computer’s processor gets, it’s just one unremarkable chip among many. If you didn’t know where to look, you might guess the CPU is the big one with the gold stripe and the giant heat sink, and you’d be completely wrong. You’ve found the GPU! The CPU is the similarly-sized black bug to the northwest, a variation of the venerable 6502.

The Commodore's graphics chip was called the VIC-II, or Video Interface Chip. It shared its memory with the CPU (the memory is the field of small chips in the southwest), and handled outputting a video signal to your television. By “shared memory,” I mean both chips literally connect to all the same memory chips via those horizontal lines, and then take turns accessing them. If the CPU wanted to tell the graphics chip something, it left a message for it in RAM, like a postcard.
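To make the postcard idea concrete, here is a toy model of it. It is not an emulator, and the function names are made up for illustration; the only C64-specific details it leans on are the 64 KB of RAM, the default text screen location at address $0400, and the 40x25 character grid.

```javascript
// A toy model, not an emulator: one block of RAM that both chips can see.
const ram = new Uint8Array(65536); // 64 KB of shared memory

const SCREEN_BASE = 0x0400;   // default location of the C64's text screen
const SCREEN_COLUMNS = 40;
const SCREEN_ROWS = 25;

// "CPU" side (hypothetical helper): leave a postcard by writing a
// character code into screen memory.
function cpuWriteChar(column, row, screenCode) {
  ram[SCREEN_BASE + row * SCREEN_COLUMNS + column] = screenCode;
}

// "Video chip" side (hypothetical helper): on its turn, read the very
// same bytes back out in order to draw them.
function videoChipReadScreen() {
  return ram.subarray(SCREEN_BASE, SCREEN_BASE + SCREEN_COLUMNS * SCREEN_ROWS);
}

cpuWriteChar(0, 0, 1); // screen code 1 is the letter A
console.log(videoChipReadScreen()[0]); // 1
```

Neither side calls the other. The write and the read only meet in the shared array, which is the whole point of the arrangement.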

It’s easy to scoff at the graphical capabilities of these machines, whose entire visual universe is smaller than a standard application’s icon today. The Commodore 64 could drive up to 320x200 pixels on a standard TV, which sounds quaint until you do the math and realize that’s still 64,000 individual pixels, 60 times per second. I mean, I can’t do that. Knowing the CPU runs at roughly 1 MHz (meaning it ticks through about 1 million clock cycles per second, and most instructions take several cycles each), that’s 1,000,000 / (64,000 * 60), or only about 1/4 of a clock cycle per pixel. Even if updating the display were the only thing the CPU was doing, it wouldn’t come close to keeping up. It needs to delegate.
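Here is that back-of-the-envelope math spelled out as a quick sketch. The variable names are illustrative, and the clock rate is rounded to an even 1 MHz as in the text.

```javascript
// Figures from the paragraph above; the clock is rounded to 1 MHz.
const cpuCyclesPerSecond = 1000000;
const pixelsPerFrame = 320 * 200; // 64,000
const framesPerSecond = 60;

const pixelsPerSecond = pixelsPerFrame * framesPerSecond;
const cyclesPerPixel = cpuCyclesPerSecond / pixelsPerSecond;

console.log(pixelsPerSecond);           // 3840000
console.log(cyclesPerPixel.toFixed(2)); // 0.26
```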

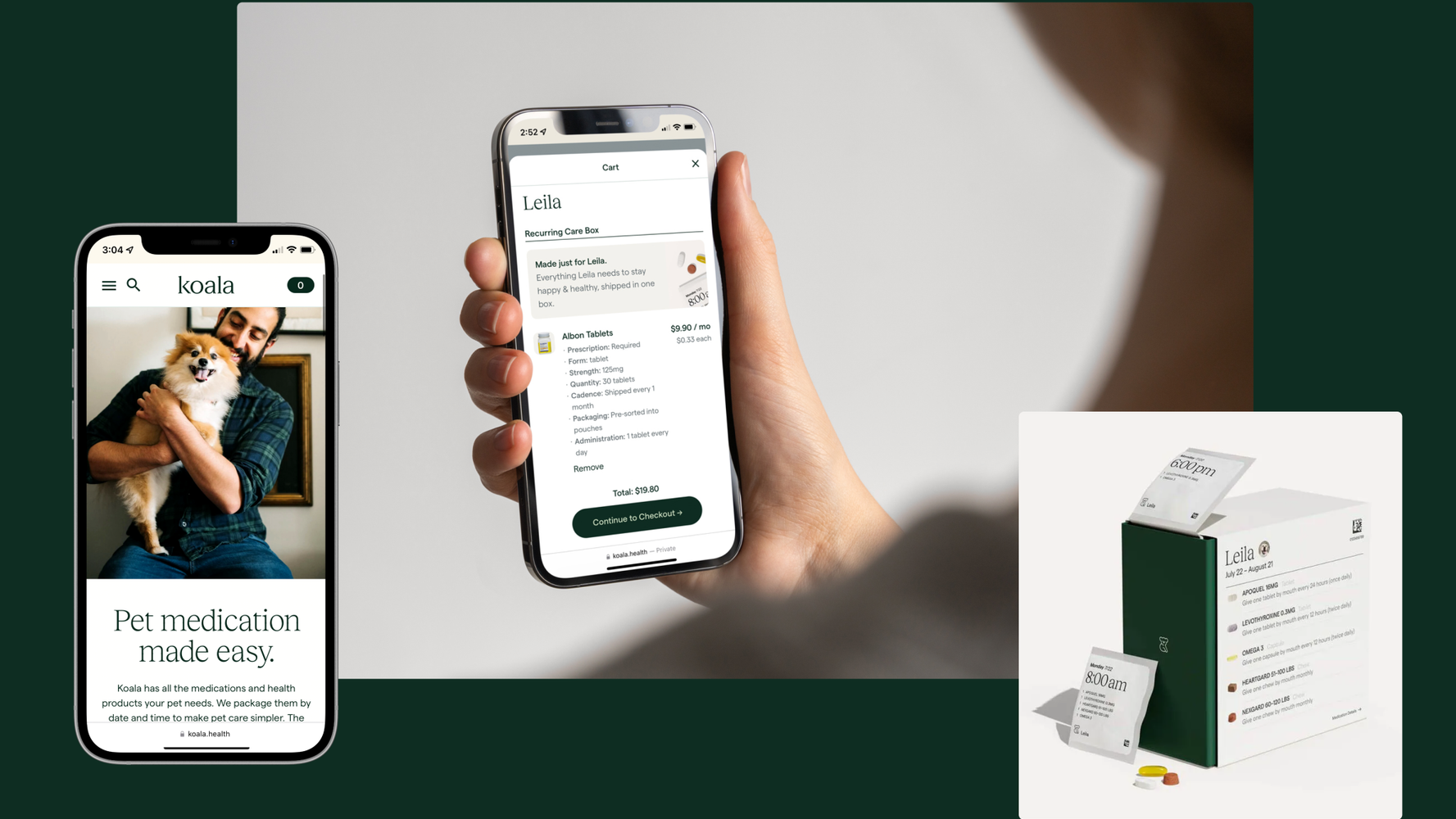

This all may seem academic, and hilariously outdated and irrelevant to modern GPU pipelines. But the physical geography of these structures is largely unchanged, even today. New computers are basically just faster old computers, and graphics are still outsourced within the machine to some other place the CPU doesn’t directly control. I've found that knowing this geography helps me make better decisions about optimizing graphics and animation. (A few recent examples of this knowledge being useful can be seen on the homepages of our sites for HOF Capital and Kendall Square.)

So. Today we have GPUs that are entire galaxies compared to the neighborhood of the C64, with hundreds or thousands of processor cores and gigabytes of their own dedicated onboard memory.

Overkill? I mean, certainly. But screens these days are more in the 3840x2160 range, so now you’re talking ~8.3 million pixels, each with at least 3 bytes of color data, drawn at least 60 times per second. Yeeeesh. It’s hard to do! A single raw frame on a 4K screen is about 24 megabytes. Even modern CPUs would be crippled constantly updating these displays on their own. They still delegate in much the same way the NES and C64 did.
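The same arithmetic for a 4K display, as a rough sketch. It assumes exactly 3 bytes per pixel and 60 frames per second, and it reports decimal megabytes, so the per-frame figure lands a little under 25 (or a little under 24 if you count in binary units).

```javascript
const width = 3840;
const height = 2160;
const bytesPerPixel = 3; // one byte each for red, green, and blue
const refreshRate = 60;  // frames per second

const pixelCount = width * height;             // 8,294,400
const frameBytes = pixelCount * bytesPerPixel; // 24,883,200
const rawBytesPerSecond = frameBytes * refreshRate;

console.log((frameBytes / 1e6).toFixed(1) + " MB per frame");         // 24.9 MB per frame
console.log((rawBytesPerSecond / 1e9).toFixed(2) + " GB per second"); // 1.49 GB per second
```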

Some Examples

Let’s look at two examples of particle systems, one driven by your CPU, and one by your GPU. They look the same, but they're built differently. Here’s the CPU one.

See the Pen DOM Element Particles by Upstatement (@Upstatement) on CodePen.

This is about as simple as it gets: each particle is a <div> that's moved about with JavaScript. Each particle gets a random speed when it’s created, and then the CPU adds that speed to it every frame to make them move. This works pretty well for a few thousand particles, but after that things get slow.
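The core of that approach might look something like the sketch below. The helper names and the speed range are assumptions, not taken from the pen, and the DOM write is left as a comment so the logic stands on its own.

```javascript
// Hypothetical helpers; the actual pen may be organized differently.
function createParticle(random = Math.random) {
  return {
    x: 0,
    y: 0,
    // Random speed in pixels per frame, between -1 and 1 on each axis.
    vx: random() * 2 - 1,
    vy: random() * 2 - 1,
  };
}

// One frame of CPU work: add each particle's speed to its position.
function stepParticles(particles) {
  for (const p of particles) {
    p.x += p.vx;
    p.y += p.vy;
  }
}

const particles = Array.from({ length: 1000 }, () => createParticle());
stepParticles(particles);

// In a browser, each particle would also own a <div>, and every frame
// (scheduled with requestAnimationFrame) its position is written back:
//   div.style.transform = `translate(${p.x}px, ${p.y}px)`;
```

Every particle costs one loop iteration and one style write per frame, and the browser then has to reconcile all of those style changes with its own layout and paint machinery.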

In this case, you’re beholden to your browser’s ideas about what optimization means, and browsers are generally tuned to quickly render mostly-static layouts and text (and rightly so). There are other tools available to us, though, like the <canvas> element in the following example. This gives us more direct control, so we can modify the pixels of a DOM element however we see fit, either directly with plain JavaScript, or accelerated using WebGL and a library like Three.js.

See the Pen ThreeJS Particles Meshes by Upstatement (@Upstatement) on CodePen.

That's a fair bit faster, and can happily support about 10x as many particles before slowing down, but it’s only solving half the problem. By using Three.js and WebGL, we get some more control over the rendering process, and as such don’t have to roll the dice on however the browser decides to optimize things. But aside from using a <canvas> instead of <div>s, all of the particle motion is still being computed in JavaScript, which means on your CPU. And the real trouble is that the work on your graphics card still has to happen. At some point, rubber must meet road, and your giant screen with its millions of pixels will need updating. If you're figuring out all those updates on the CPU, you’re going to have to copy them all over to the GPU at some point anyway. One of the real performance bottlenecks is this duplication of work.
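A rough sketch of what "still on the CPU" means here. The particle count and names are illustrative, and the Three.js step appears only as a comment so the snippet runs without a renderer.

```javascript
const COUNT = 100000;          // illustrative particle count
const FLOATS_PER_PARTICLE = 3; // x, y, z

const positions = new Float32Array(COUNT * FLOATS_PER_PARTICLE);
const velocities = new Float32Array(COUNT * FLOATS_PER_PARTICLE);
for (let i = 0; i < velocities.length; i++) {
  velocities[i] = Math.random() * 2 - 1;
}

// Still CPU work: every coordinate is touched in JavaScript each frame.
function stepOnCpu() {
  for (let i = 0; i < positions.length; i++) {
    positions[i] += velocities[i];
  }
  // With Three.js, this array would back a BufferAttribute, and setting
  //   geometry.attributes.position.needsUpdate = true;
  // asks the renderer to re-upload the buffer to the GPU.
  return positions.byteLength; // bytes that have to cross over this frame
}

const bytesPerFrame = stepOnCpu();
console.log(bytesPerFrame);      // 1200000
console.log(bytesPerFrame * 60); // 72000000
```

At this size that is 1.2 MB copied per frame, or 72 MB per second at 60 frames per second, and the figure grows linearly with the particle count.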

With a really big particle system, even just copying that data to VRAM is going to be too expensive to do at 60 Hz. Your CPU is busy doing other stuff, sure, but the real problem is the spatial reality of the situation. Your CPU is over here, and your GPU is way over yonder. All that data is going to have to travel across your motherboard (that green field of chips and lines) to the graphics card every frame, and there’s a lot of traffic there! Network traffic, memory traffic, IO, disk access. It’s not a quiet road. If you want real speed and, say, a million-plus particles, you need to move the work closer to its destination. Code run on the graphics card runs physically close to the VRAM and video connector, and that matters! It has unfettered direct access to that memory on a private road, a 12-lane highway only it can use.

In addition to its many other advantages, our GPU is nestled right next to its own VRAM and the video output, and is tailor-made to spray pixels from its memory onto an HDMI monitor with a minimum of friction, fuss, or impact on external systems. Much like the C64’s shared memory model, once you copy something to VRAM, the GPU will dutifully re-paint it to the screen so your CPU can keep its other thousands of plates spinning.

See the Pen ThreeJS Particles Shader by Upstatement (@Upstatement) on CodePen.

In the above example, we happily animate millions of particles by moving all the code that does the work of updating particle positions to the GPU itself. Once you set things in motion, your CPU has no idea where particles are, or what is being displayed on the screen. It's delegated that to the GPU completely, in the form of shader code, which is submitted once from JavaScript, compiled, and then sent off to the graphics card. From then on, instead of calculating and copying hundreds of thousands of particle locations every frame, the CPU copies just one number: the number of seconds that have passed since the animation began. Then your GPU uses that number on its many cores to do all the motion work in situ, without anybody else in the computer knowing about it.
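A minimal sketch of that idea, assuming Three.js's ShaderMaterial conventions (which supply `position`, `modelViewMatrix`, and `projectionMatrix` to the vertex shader). The names `uTime`, `velocity`, `materialOptions`, `onFrame`, and `positionAt` are made up for illustration, and the pen's actual shader may well differ.

```javascript
// GLSL vertex shader: runs on the GPU once per particle, every frame.
const vertexShader = `
  uniform float uTime;      // seconds since the animation began
  attribute vec3 velocity;  // per-particle, uploaded once at startup

  void main() {
    vec3 moved = position + velocity * uTime;
    gl_Position = projectionMatrix * modelViewMatrix * vec4(moved, 1.0);
    gl_PointSize = 2.0;
  }
`;

const fragmentShader = `
  void main() {
    gl_FragColor = vec4(1.0); // plain white particles
  }
`;

// Options in the shape THREE.ShaderMaterial accepts.
const materialOptions = {
  uniforms: { uTime: { value: 0 } },
  vertexShader,
  fragmentShader,
};

// The only per-frame CPU work: update one number.
function onFrame(elapsedSeconds) {
  materialOptions.uniforms.uTime.value = elapsedSeconds;
}

// The same formula in JavaScript, to check the math for a single particle.
function positionAt(start, velocity, t) {
  return start.map((s, i) => s + velocity[i] * t);
}

onFrame(2);
console.log(positionAt([0, 0, 0], [1, 0.5, -1], 2)); // [ 2, 1, -2 ]
```

In a real scene you would pass these options to `new THREE.ShaderMaterial(...)`, attach a `velocity` attribute to the geometry once, and set `material.uniforms.uTime.value` each frame. Because every position is derived from its start, its velocity, and the time, nothing but that single float has to travel to the GPU after startup.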

The trick isn’t a stealthy way to copy data faster, or use fancier algorithms, math, or compression, but rather to avoid having to copy much of anything at all.

Now, you don’t always need to use this kind of technique. If you're animating dozens or even a few hundred things, often your CPU is more than capable. But for situations where you want animations to either be very performant/efficient or extremely large, structuring your code with the geography of the situation in mind is often the only way.

Using this approach needn't be limited to updating something’s position. If you find yourself running up against the limits of what background-filtering and browser transforms can do, you might enjoy experimenting with some of these tools. These same techniques can be used for post-processing effects on images and videos that your CPU won’t even know are happening. The Book of Shaders is a great place to learn, and check out Shadertoy for inspiration (if not terribly understandable code).

And if you do get a chance to poke around in someone's Commodore, try not to lose any screws.