Mode collapse is the way AI tends to give bland, boring responses, and it's becoming a big problem as more businesses try to use AI as a push-button idea box. Our CEO Mike Swartz and CTO Mike Burns talked about some workarounds. (Claude came up with the title 🥀)

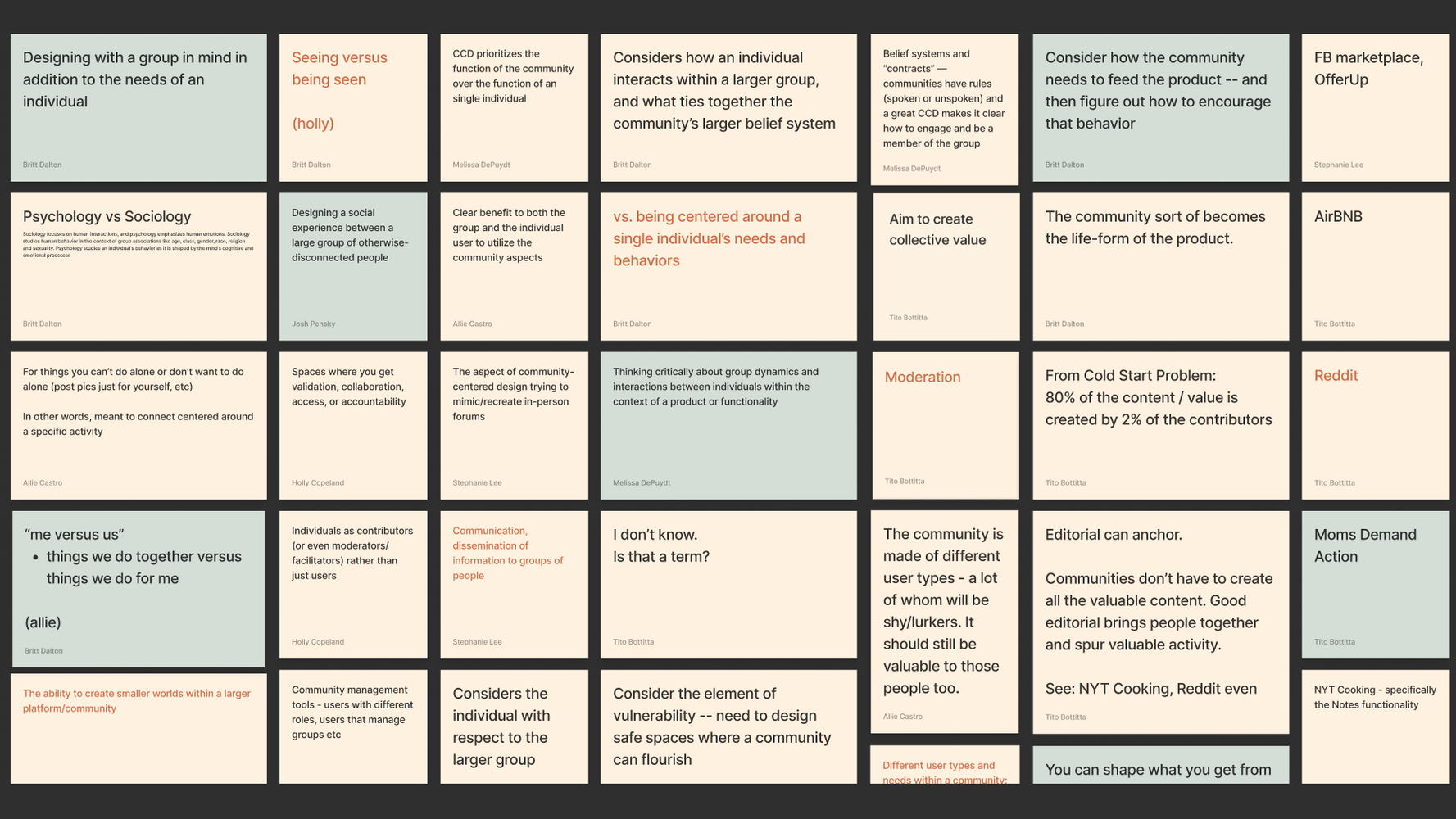

Mike Swartz: Models can be powerful tools for us and our clients, but our clients often have no idea what to ask them, and by default the underlying tech can be very basic and deliver results below our level of taste if we're not careful. So I want to explore its limits and work out defaults and best practices for us, and also bake them into the user interfaces we hand off to people who will definitely never, ever read all the shit I wrote here.

Mode collapse in generative output is a model's tendency to collapse toward common or accepted outputs, based on its training data or RLHF fine-tuning, plus safety guardrails in the system prompt. There are a few ways to mitigate this that we don't have access to in a standard chat interface, like increasing the temperature (the randomness of token sampling), changing the training set, or steering the reinforcement learning process toward certain outcomes.
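For readers who haven't met the temperature knob directly, here is a minimal sketch of how temperature sampling is commonly implemented: the model's raw scores (logits) are divided by the temperature before a softmax, so a low temperature concentrates probability on the most common candidate and a high one spreads it out. The function names, tokens, and logit values are hypothetical and only for illustration; this is not any vendor's actual API.

```python
import math
import random

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        # T = 0 is usually treated as greedy decoding (argmax), not a division.
        raise ValueError("temperature must be > 0; use argmax for greedy decoding")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens: list[str], logits: list[float],
                 temperature: float, rng: random.Random) -> str:
    """Draw one token according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-word candidates for a brand name and their logits.
tokens = ["Studio", "Labs", "Forge", "Halcyon"]
logits = [3.0, 2.5, 1.0, 0.2]

cool = softmax_with_temperature(logits, 0.5)  # sharper: "Studio" dominates
warm = softmax_with_temperature(logits, 1.5)  # flatter: rarer words get a chance
rng = random.Random(42)
picks = [sample_token(tokens, logits, 1.5, rng) for _ in range(5)]
```

Comparing `cool` and `warm` shows the trade-off: the probability of the top candidate drops as temperature rises, which is exactly the lever that pushes output away from the most common answer.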

BUT there are some things you can do, and I tried a few of them here to see how the output of a past brand naming exercise changed: divergent/convergent thinking with a chain-of-thought (CoT) pause before returning; persona injection to find more statistical weight around creative territories (I gave it Virgil Abloh and Errolson Hugh) [and this title came from a Jacqueline Novak persona]; and asking it to do a guardrail dump (essentially, when it approaches its limits based on the collapse conditions, it tells me what triggered them).
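The three techniques above can be combined into a single prompt. The sketch below shows one hypothetical way to assemble it: a persona line, a divergent step, a pause to reason, a convergent step, and a guardrail dump at the end. The wording, step structure, default counts, and the `build_naming_prompt` helper are all assumptions for illustration, not the actual prompt used in the test.

```python
def build_naming_prompt(brief: str, personas: list[str],
                        n_divergent: int = 100, n_final: int = 10) -> str:
    """Assemble a naming prompt using persona injection, divergent/convergent
    steps, a reasoning pause, and a guardrail dump (hypothetical wording)."""
    persona_line = " and ".join(personas)
    steps = [
        # Persona injection: pull in the probability mass around these people.
        f"Adopt the combined perspective of {persona_line}.",
        f"Brief: {brief}",
        # Divergent step: go wide before judging anything.
        f"Step 1 (divergent): list {n_divergent} candidate names across as many "
        "distinct creative territories as you can, grouped by territory.",
        # CoT pause: reason about the spread before narrowing.
        "Step 2 (pause): before choosing, think through which territories are "
        "the most surprising while still on-brief.",
        # Convergent step: narrow to a shortlist.
        f"Step 3 (convergent): pick the {n_final} strongest names and explain each in one line.",
        # Guardrail dump: ask the model to report where it played it safe.
        "Step 4 (guardrail dump): list every point where you steered toward safer "
        "or more common options, and what triggered it.",
    ]
    return "\n\n".join(steps)

prompt = build_naming_prompt(
    "A technical apparel label with a streetwear edge",  # hypothetical brief
    ["Virgil Abloh", "Errolson Hugh"],
)
```

Keeping the steps as a list makes it easy to drop or reorder one technique at a time when comparing outputs.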

Takeaways are:

- It considered many more words (10x in Claude’s case) than with no prompt engineering or expansion

- It accepted more unexpected results

- It was more aesthetically aligned with the brief and culture (persona injection not only brings in certain likely construction types but mountains of probability around them, so if the personas are relevant to a certain culture that culture gets pulled in, like streetwear or cyberpunk here)

- The entire instance of the model was more thoughtful and gave better human-in-the-loop information to build on in brainstorming and collaboration, by showing me its territories and adjacencies

- The names in the expanded sets were much closer to the one our client chose

- It thought of some things I wish we’d thought of in that past naming project

- When we voted on the names, the most votes were given to Claude w/ diversity expansion, but ChatGPT o3 w/o expansion got the second most

Mike Burns: I do wonder how much it's giving you accurate/truthful information with the meta questions (guardrail dump) vs. making up stuff that sounds like what you want.

I guess it doesn't really matter, in a sense.

Mike Swartz: I totally agree and wish I knew—but in asking it stuff in that way over the last week I've gotten it to give me pretty wild shit. This is really emerging as a hard-to-solve problem at the platform and UI level as more businesses adopt AI and use it like a push-button idea box. The ideas that pop out by default are pretty dopey and many struggle to go further.

Mike Burns: Totally. I will say, my engineering/UNIX-philosophy-brainwashed brain does wonder how much of this problem needs to (or should) be solved at the foundation model layer. The biggest innovations that have made AI useful for me have all involved downstream tools for context management. I don't think I'll ever trust a foundation model without application layer solutions. This definitely makes me want to explore this space more.

Mike Swartz: I actually think it's a little of both. It's easiest to start at the application layer, and that would be sufficient for most use cases. But for hyper-specific cases, the foundation layer needs to be considered. It's not just text: mode collapse can keep certain conditions from being found in automated radiology settings, because the model looks for what's common (like real people) and throws away edge cases. But if you access a model through an API or use open models, you can change the temperature and remove guardrails.
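As one example of what an application-layer fix could look like, the sketch below takes a long list of generated names and greedily keeps the ones that are most different from what has already been kept (a simple max-min diversity selection), so near-duplicates like "Nightshift" and "Night Shift" don't crowd the shortlist. This is an assumed technique for illustration, not something used in the test above; the character-bigram Jaccard distance and the `pick_diverse` helper are hypothetical choices.

```python
def bigrams(name: str) -> set[str]:
    """Lowercased character bigrams, ignoring spaces and punctuation."""
    t = "".join(ch for ch in name.lower() if ch.isalnum())
    return {t[i:i + 2] for i in range(len(t) - 1)}

def distance(a: str, b: str) -> float:
    """Jaccard distance between two names' bigram sets (0 = identical)."""
    A, B = bigrams(a), bigrams(b)
    if not A and not B:
        return 0.0
    return 1 - len(A & B) / len(A | B)

def pick_diverse(candidates: list[str], k: int) -> list[str]:
    """Greedy max-min selection: start from the first candidate, then keep
    adding whichever remaining name is farthest from everything chosen."""
    if not candidates or k <= 0:
        return []
    selected = [candidates[0]]
    remaining = list(candidates[1:])
    while remaining and len(selected) < k:
        best = max(remaining, key=lambda c: min(distance(c, s) for s in selected))
        selected.append(best)
        remaining.remove(best)
    return selected

shortlist = pick_diverse(["Nightshift", "Night Shift", "Quarry", "Ember"], 3)
# shortlist == ["Nightshift", "Quarry", "Ember"]
```

String overlap is a crude proxy for "different idea"; a real tool would more likely compare meaning, but the selection loop stays the same.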

Mike Burns: Fair. Fine-tuning is another solution. But none of the solutions at the foundation model layer will reliably "solve" for indeterminacy. (Even setting temp to 0 can generate different responses for the same prompt.) There's always a dice-roll/YMMV effect, which is a feature in some applications but a critical bug in others.
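Why would temperature 0 still vary? Temperature 0 is usually implemented as greedy decoding (always take the highest-scoring token), which is deterministic on paper. One commonly cited reason hosted models still drift is that floating-point addition isn't associative, and batched GPU kernels may add the same numbers in a different order from run to run. The toy sketch below (hypothetical tokens and values, plain Python rather than a real model) shows how a different summation order can flip a near-tie in greedy decoding.

```python
def add_in_order(values) -> float:
    # Plain left-to-right float addition. We avoid sum() here because newer
    # Python versions use compensated summation for floats, which hides the effect.
    total = 0.0
    for v in values:
        total += v
    return total

def greedy_pick(tokens: list[str], logits: list[float]) -> str:
    # Temperature 0 == greedy decoding: take the argmax.
    # On an exact tie, max() returns the first maximal index.
    best = max(range(len(logits)), key=lambda i: logits[i])
    return tokens[best]

parts = [0.1, 0.2, 0.3]  # hypothetical partial contributions to one logit
tokens = ["Studio", "Forge"]

run_a = [0.6, add_in_order(parts)]            # second logit: 0.6000000000000001
run_b = [0.6, add_in_order(reversed(parts))]  # second logit: 0.6

print(greedy_pick(tokens, run_a))  # Forge
print(greedy_pick(tokens, run_b))  # Studio
```

Same inputs, same "temperature 0", different answer: the only change is the order of three additions.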

I haven't wrapped my brain around the best way to improve the taste of an LLM. The first thing that comes to mind is the difference between RLHF (telling LLMs what you like/don't like) and Constitutional AI (embedding a value system into the training process, as Anthropic does with Claude). The latter is far more intuitive to me. Might be why Claude did better in your test.

Mike Swartz: My gut on the taste equation is that like with humans—you can't teach it. But you CAN find proxies for it, and that's where I like persona injection to move the weights towards more interesting portions of the likely responses. Like when you’re vibe coding and tell it you’re John Carmack and it works better lol

Mike Burns: That sounds right, and is also just, crazy. My first thought is—why don't you assume I always want to program like John Carmack? How spoiled we have become.