How Upstatement is working with generative AI to hit new creative highs.

Upstatement was born in the early days of the mobile revolution. In 2011, we launched one of the first major responsive websites for the Boston Globe, and in the years that followed, the way we made things didn’t change all that much. People still wrote all the code and copy, and manually created art like photos and graphics. But now AI is here.

You might be oversaturated by all the AI talk out there, but it’s not going away. AI has changed the way we work, and will keep changing it. While we certainly don’t have all the answers about where things will land, we do have experience and ideas to share as we all navigate the opportunities and ethical dilemmas associated with AI together.

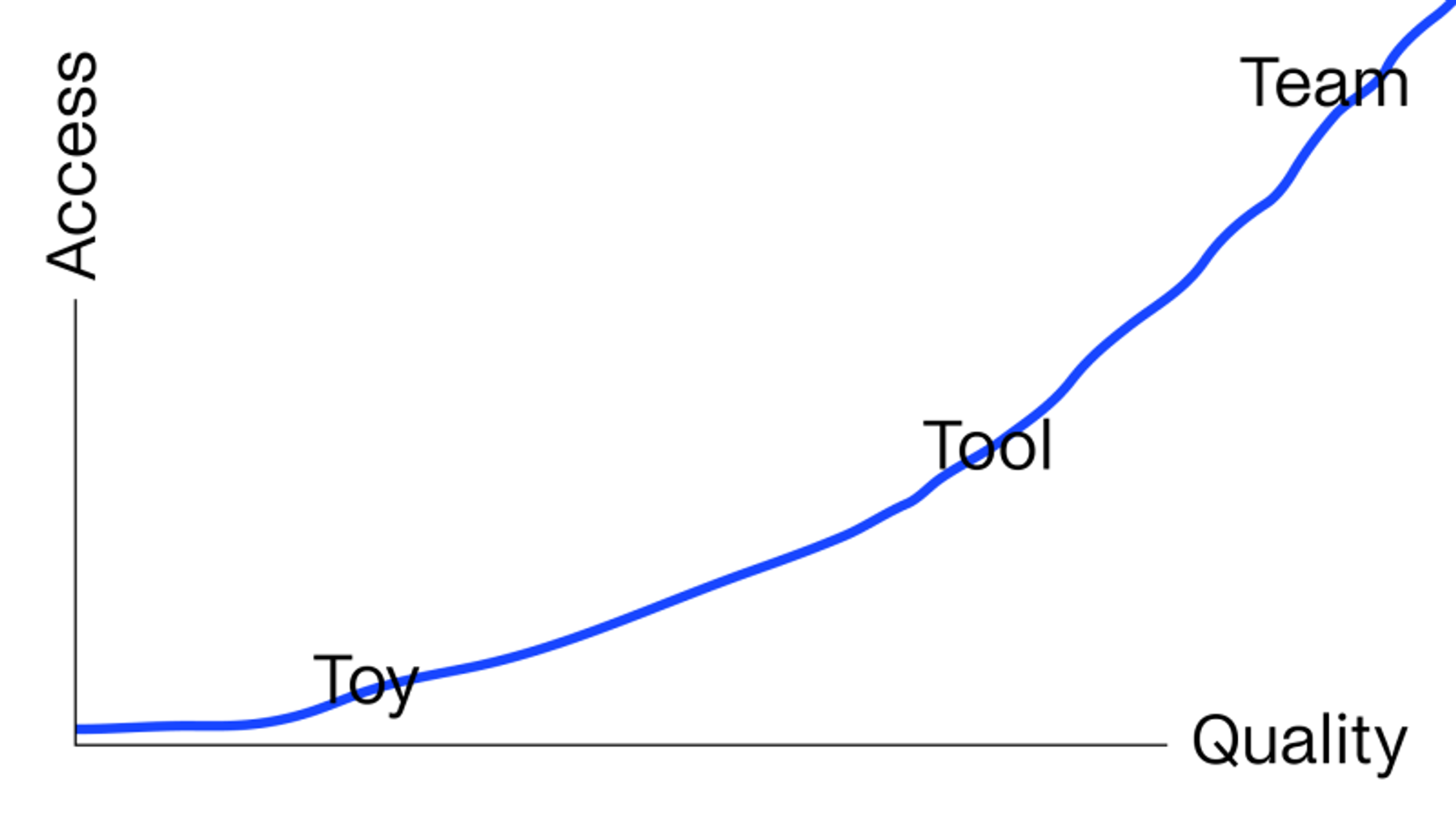

From Toy → Tool → Team

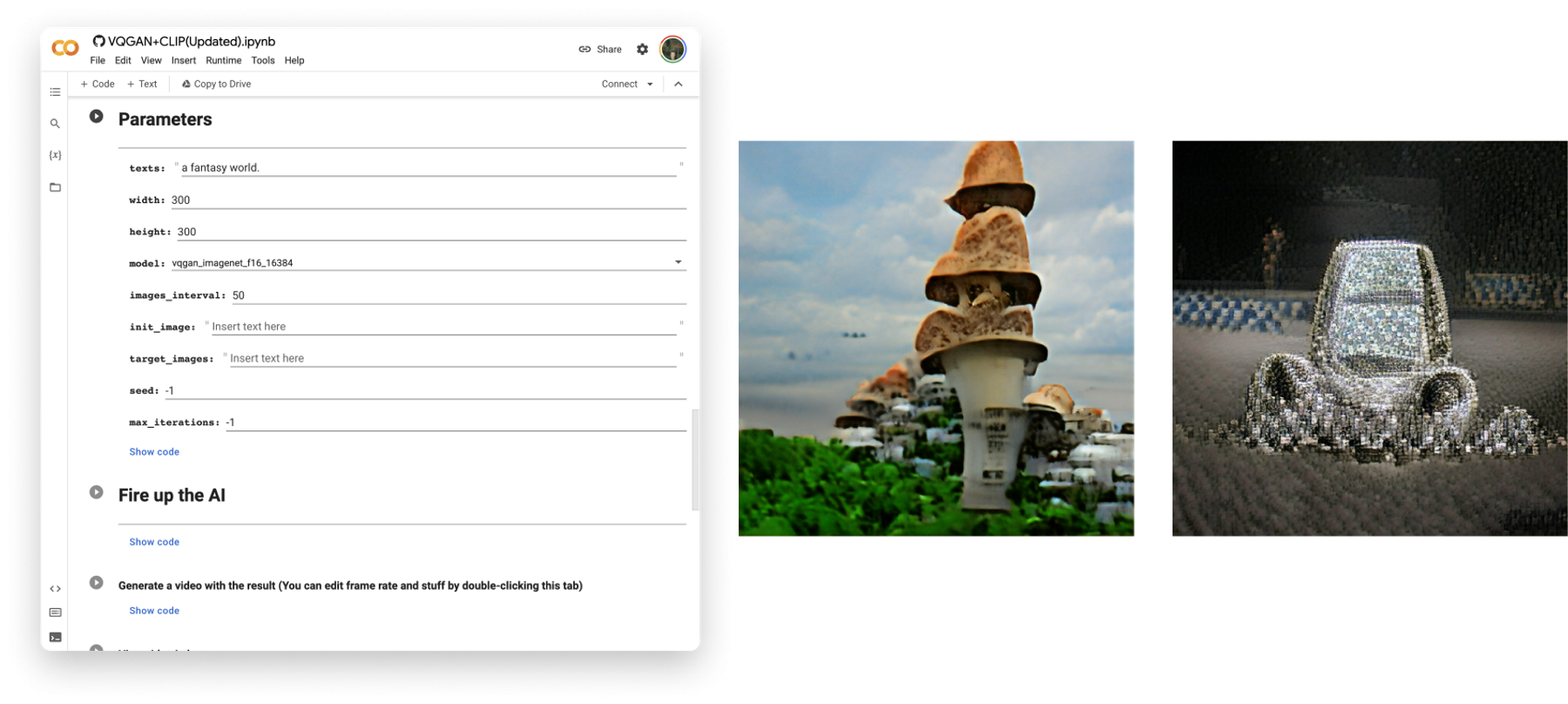

When OpenAI’s models first became available in beta, you basically had to write code and rent computing power to use them. In the Toy stage, open-source experiments were available to play with, but the output was slow and…very strange. Though we couldn’t really use it as professionals, the idea that images could be generated from text was exciting.

By 2022, an explosion of new paid services like DALL·E, Stable Diffusion, and Midjourney came onto the scene and started to feel like usable Tools. You could enter prompts in a web browser and see higher-quality results in seconds.

With GenAI text-to-image products becoming both more accessible and higher in quality so quickly, we experimented obsessively. These services reward persistent trial and error; we ran short, simple prompts; long, detailed prompts; image prompts; and even prompts generated by ChatGPT, then compared outputs.
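For anyone who wants to run this kind of trial and error systematically, here is a minimal sketch of how a batch of prompt variations could be assembled before pasting them into a service. It is an illustration only, not the process we actually used: the function name `build_prompt_variants` and the style and detail strings are hypothetical, and the script only builds text. It does not call any image service.

```python
from itertools import product


def build_prompt_variants(subjects, styles, details=("",)):
    """Combine every subject, style, and optional detail into one prompt string."""
    prompts = []
    for subject, style, detail in product(subjects, styles, details):
        parts = [subject, style, detail]
        # Drop empty pieces so the short prompts stay short.
        prompts.append(", ".join(p for p in parts if p))
    return prompts


# Hypothetical inputs: one subject, two styles, and a short vs. detailed variant.
variants = build_prompt_variants(
    subjects=["a purple sky with a cloud shaped like a hand"],
    styles=["oil painting", "photograph"],
    details=["", "dramatic lighting, wide angle"],
)

for prompt in variants:
    print(prompt)
```

Keeping the short and detailed versions of each prompt side by side makes it easier to compare what the extra wording actually changes in the output.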

Most of these products are essentially thin, command-driven interfaces to an underlying image model. For example, Midjourney uses Discord as its UI and, while awkward, this emphasizes the fact that you’re in a dialogue (literally using a chat app) with a network of computers that predicts and paints pixels. You ask for something and never quite know what you’ll get. Ask for the same thing ten times and you may get totally different results. Pick one that seems interesting, and remix it. You might lose hours going back and forth, generating hundreds of options. And then, you start to design around them. This is the moment that GenAI started to feel like an extension of our design team.

AI Art for Client: Earth Alliance

In the summer of 2022, we began working with a startup philanthropy with a limited budget but big aspirations. They set out to collaborate with creatives and cultural influencers to introduce new narratives around climate change, asking us to create a brand that invited audiences to reimagine what the world would look like if everyone came together to solve the crisis. Since they hadn’t made any content yet, we had to be inventive. This seemed like a perfect use case for AI art: prototype a wide range of possibilities to set up a series of climate collaborations.

We generated hundreds of images of people, paintings, places, and objects to express the nascent brand. We considered these placeholders at first (similar to the way a designer might “swipe” an illustration or photo to demo a design language). As we built a library of visual experiments, we also talked about the inherent contradictions of using this technology as professional designers. Concerns about copyright, energy consumption, and the impact on human jobs led us to develop a kind of rubric for each use case.

AI Art Considerations Framework

- Context: How will I use the art?

- Concept: What prompt will I start with?

- Iteration: Where will it take me?

- Efficiency: What’s the fastest way to get there?

- Quality: Which model will generate the best result?

- Copyright: Can the art be original — not derivative?

- Terms: Can I actually use it?

- Impact: How will this impact human creators?

It turns out, there are things that AI can generate that you just wouldn’t get from a typical art commission. Can you imagine asking a human artist to “try 100 different ways to make an elaborate oil painting in the style of [a 17th-century painter]”? The whole process of moving from a blank canvas to a stack of wildly interesting visual concepts is fast-paced and inspiring.

Delicious Nuggets, an editorial product that we built for Earth Alliance, is a set of resources and inspiration for digital and social media creators. When we designed this greenwashing piece, “How Not to Get Canceled,” we needed to feature some villains. We set up a prompt like we would a photo shoot, then remixed the best results to make each one a different person.

How long it takes to make something decent varies. Sometimes it's almost immediate. And sometimes you can iterate for days and never get anything quite right. But on average it's been taking our team about thirty minutes. If it’s photographic and kind of generic, either a landscape or a person, it’s super fast. If it’s conceptual and highly styled, it takes more trial and error and you can be taken somewhere unexpected. Fast might be: a blue sky with chemtrails. Slow might be: a purple sky with a cloud shaped like a hand. If the AI has to invent something unlike anything it’s been trained on, it’s unpredictable.

GenAI as Image Processor

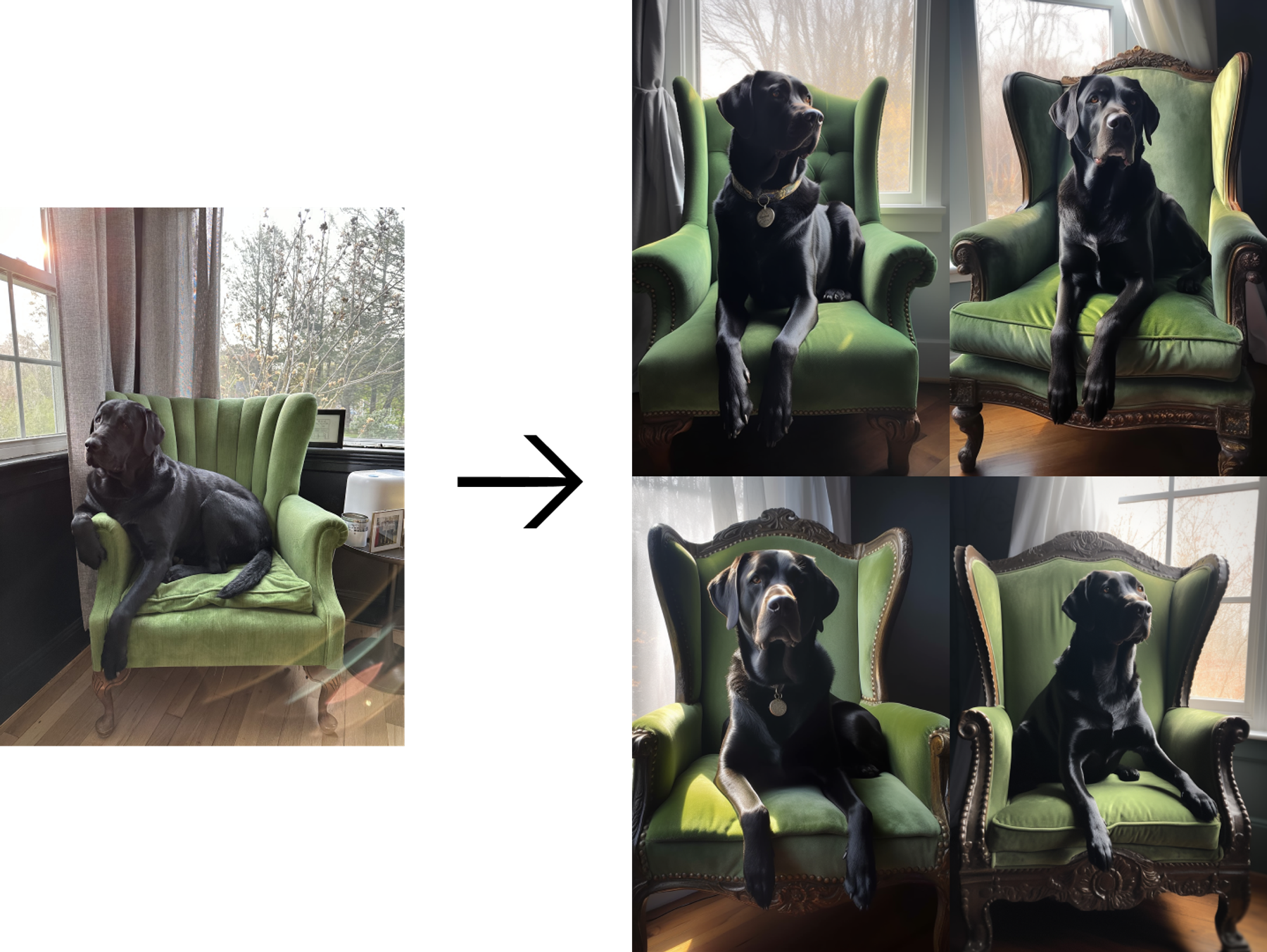

Some text-to-image platforms respond well to image prompts. Each model is different, but in something like Midjourney, processing a phone photo, or even a quick pencil sketch, through the app can enhance the original in unexpected ways. We like this approach because it gives us more control and provides a way to “train” the model using something we made.

Standalone tools are also rapidly improving. AI upscaling services can take a relatively small image and blow it up to 800 percent. While this may introduce some strange artifacts, it makes the output much higher resolution. Other tools can remove a photo’s background, providing you with a transparent PNG in seconds.
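To make the 800 percent figure concrete, here is a rough sketch of the arithmetic. It assumes the percentage applies to each side of the image rather than to its total area, and the 1024-pixel starting size is a hypothetical example, not a measurement from our projects.

```python
def upscaled_size(width, height, percent=800):
    """Return the pixel dimensions after scaling each side by `percent`."""
    factor = percent / 100
    return round(width * factor), round(height * factor)


# Hypothetical starting size: a 1024 x 1024 generated image.
print(upscaled_size(1024, 1024))  # (8192, 8192)
```

Under that assumption, the upscaled file has 64 times as many pixels as the original, which is why artifacts that were invisible at the small size can become noticeable.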

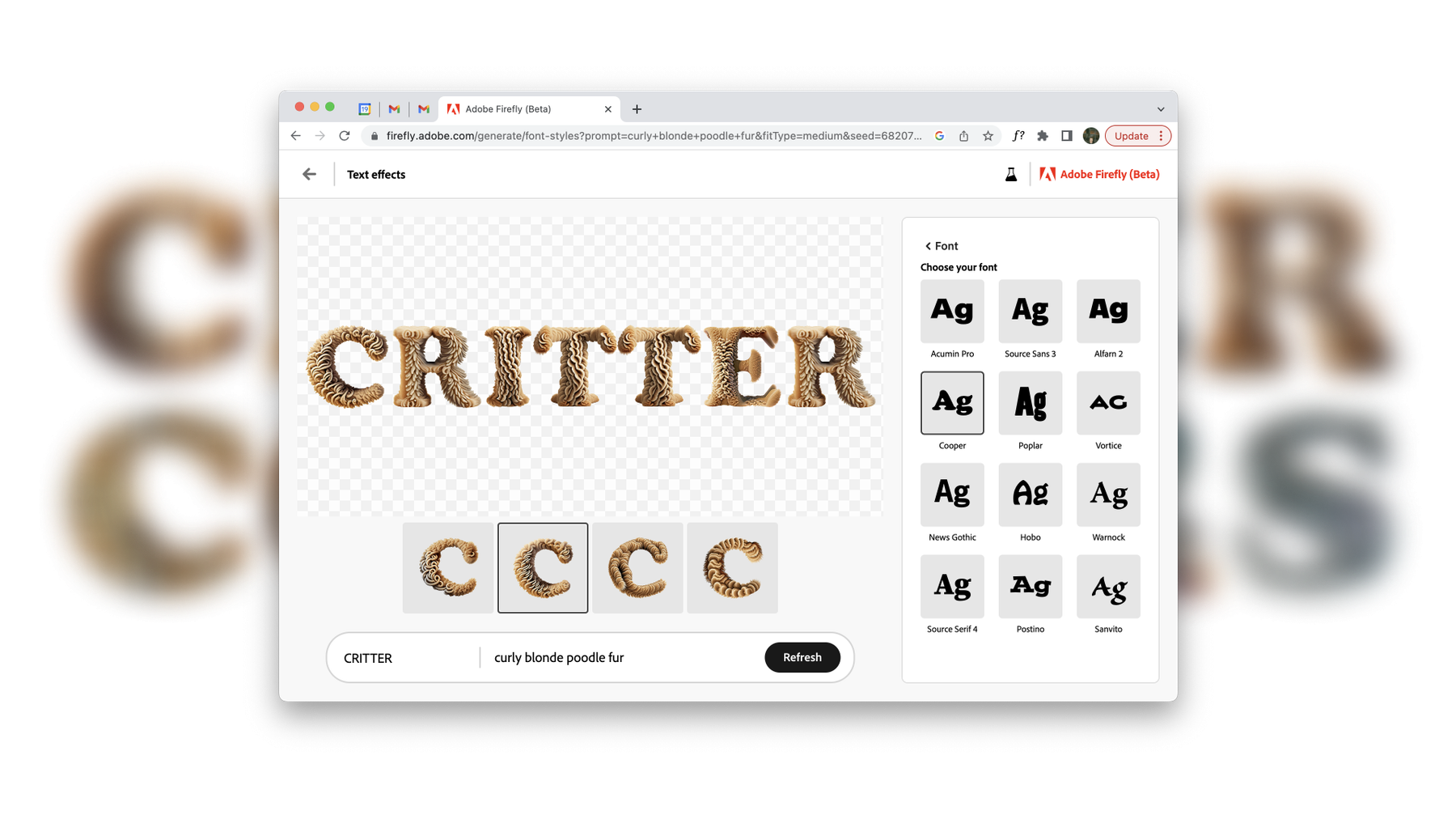

Adobe just introduced a beta version of Firefly. Some of its early features include GenAI type, vector recoloring, and even a new generative fill feature in Photoshop. Firefly is working to solve a historic gap in GenAI: typography. Most services can’t even spell. Adobe also offers an indemnity clause for users stating that Adobe will pay any copyright claims related to works made in the company’s generative AI art creation tool. Copyright risk had previously been a big barrier to AI use in client work.

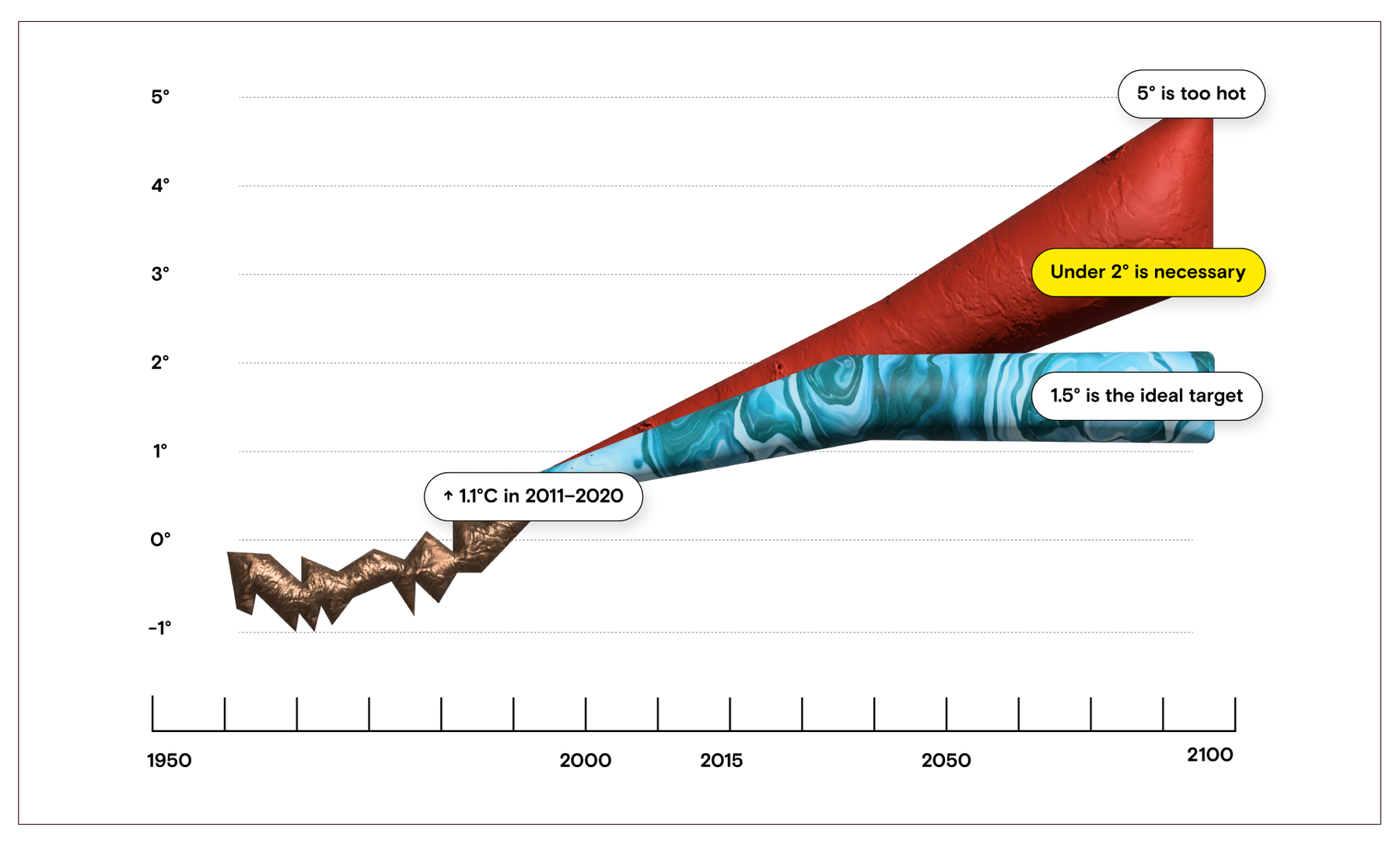

Spline 3D has a feature that generates AI materials and applies them to objects. We’ve used this for data viz and promo posters.

Sometimes the prototyping process is a way to test the limits of each tool. And sometimes it’s just funny.

Generating the Brief

GenAI art can also be used to inform a human commission. During our (currently in-progress) brand design work for a university, we explored new mascots, then designed around dozens of potential rendering styles and poses ahead of hiring one of our favorite illustrators, Jared Tuttle. He found it helpful to see how we planned to build an identity system around the art, and it shortened the distance for us to get it right.

The Future Is…

We don’t know where any of this will go from here, but the past year has already proven AI’s potential for us. Our process has become simultaneously shorter and more expansive. Despite the big questions around ownership and impact that remain, we’ve seen how the dialogue between humans and AI can be an opportunity for users to serve as ethical guides. As powerful as this technology is, it’s not something we trust to tell the difference between right and wrong. AI may help us be even more creative and productive, but at the end of the day, it can’t see nuance.

It just predicts pixels.