Upstatement has been exploring different AI applications as part of their Discovery AI program, developing an AI art direction practice and building an AI chatbot for MIT. I joined them as an AI experience research engineer to continue this work. I’m here to see what’s possible, what will be possible very soon, and maybe most importantly, what’s useful for real-world client applications. Upstatement collaborates with people across industries—a client might be an editor, a product manager, a tech founder, or an admissions officer. What problems do they have that we could solve using LLMs? What do their users want?

Our approach to this giant question looks like a lot of Figjams, prototyping, and talking to all kinds of specialists. Follow along as I research and build alongside Upstatement.

Here’s where we are so far.

Spark the right ideas and build

I started holding conversations internally and facilitating brainstorms to gather (1) inspiring AI tools, (2) ideas for AI-powered features, (3) common client problems that could be addressed, and (4) important values in this initiative. With AI, so much is possible; we generated ideas around shaping the future of websites, personal AI assistants, and tools for reading and writing. But having experts in design, product, brand, editorial, and art direction, with deep insight into client problems, helped focus and ground the ideas that came up.

For instance, you might have an idea to fine-tune writing to match a particular voice. But it’s far more compelling to frame that idea as a solution to a real-life problem. Ally Fouts, leader of Upstatement’s brand practice, shared a possible use case. When creating a set of brand guidelines, implementation becomes much smoother if you can include plenty of sample copy that fits the guidelines. Could you use an LLM to help generate copy that fits the guidelines, and therefore help preserve brand integrity and sustainability long-term?

It was time to take these ideas as inspiration for building quick prototypes. While iterating, we could continue to home in on big ideas, learning more about real-world client needs in order to better connect the prototyping to those scenarios. Brand communications director Molly Butterfoss and I sketched out storytelling approaches, and I began posting a series of videos as part of Discovery AI—Upstatement’s ongoing exploration of the future of websites.

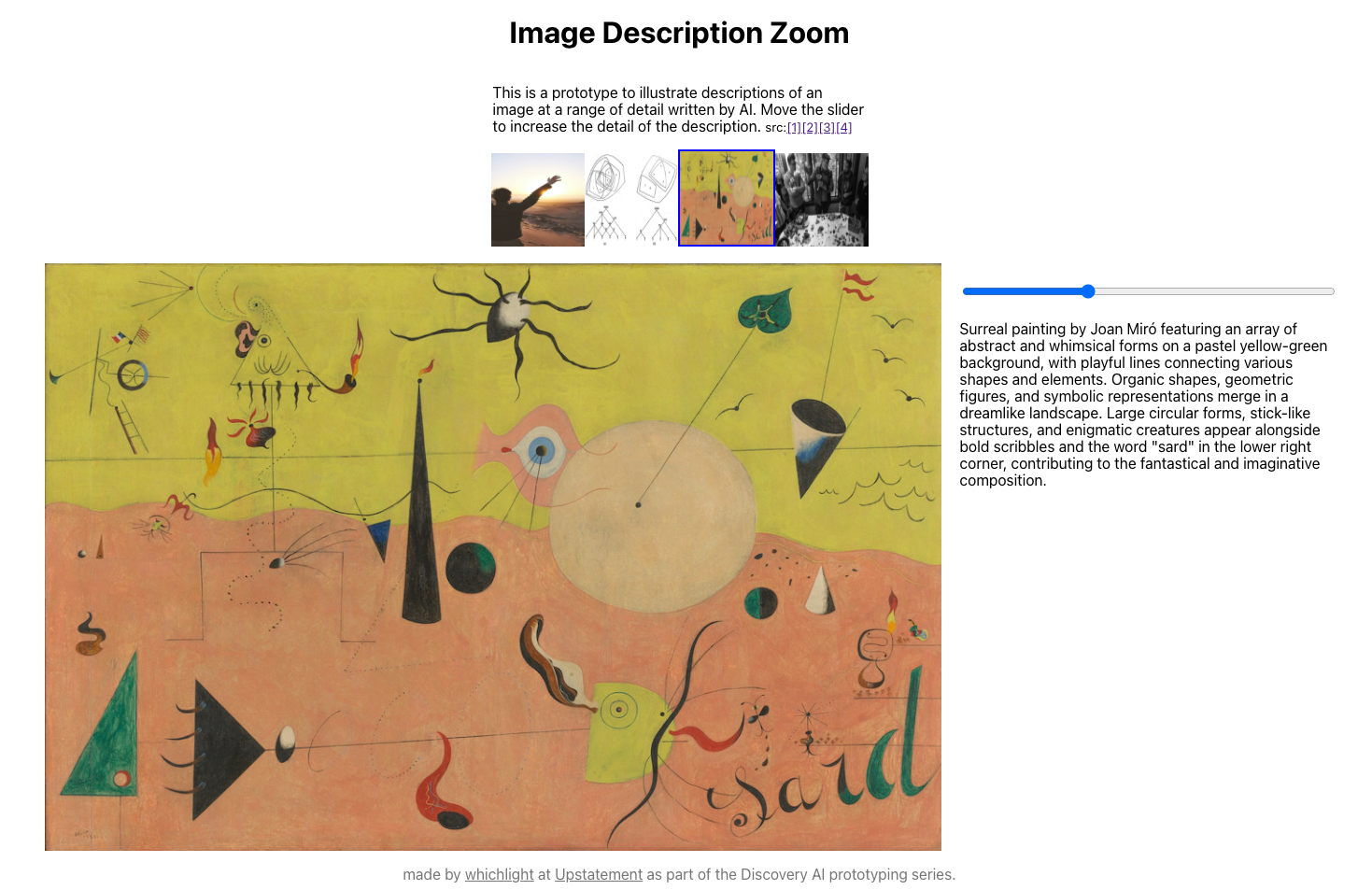

Prototype 1: Turn images into words

During the brainstorm, Gino Jacob, an Upstatement engineer, shared an idea for an image alt text generator for CMSs. Alt text is often overlooked, but it’s valuable.

Recently, OpenAI released GPT-4V, a powerful LLM that can perform image-based reasoning, taking an image as input alongside a text prompt. We realized we could use this to help with image alt text! While testing out different prompts for alt text, I realized that the range of information you can extract from an image was compelling in and of itself. This wouldn’t have been possible before GPT-4V.

I created several prompts that would describe an image with increasing levels of detail, and used these prompts with a range of images: a photo, a diagram, and a painting.

You can explore the prototype here and see the demo video here. Use the slider to increase the level of text detail. The short description is useful for a quick overview of the image, and the detailed one gives an idea of not only the content, but also the form, in some cases putting words to visual qualities.
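A minimal sketch of how a detail slider could map to prompts, assuming the OpenAI Chat Completions vision message format (a `text` part plus an `image_url` part carrying a base64 data URL). The three prompts, the `build_vision_request` helper, the model name `gpt-4-vision-preview`, and the `max_tokens` scaling are illustrative assumptions, not the prototype’s actual prompts or code.

```python
import base64

# Hypothetical prompts, one per slider position; not the prototype's real prompts.
DETAIL_PROMPTS = {
    1: "Write one short sentence of alt text for this image.",
    2: "Describe this image in a short paragraph: its subject, setting, and key elements.",
    3: ("Describe this image in detail: its content, layout, composition, "
        "color, and, if relevant, its artistic style or technique."),
}


def build_vision_request(image_bytes: bytes, level: int,
                         model: str = "gpt-4-vision-preview") -> dict:
    """Build a Chat Completions request body pairing a detail-level prompt with an image.

    The image is sent inline as a base64 data URL (assumed to be a JPEG here).
    """
    if level not in DETAIL_PROMPTS:
        raise ValueError(f"level must be one of {sorted(DETAIL_PROMPTS)}")
    data_url = "data:image/jpeg;base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        # Assumption: allow longer answers as the slider moves toward more detail.
        "max_tokens": 300 * level,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": DETAIL_PROMPTS[level]},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
    }
```

With the official Python SDK, a body like this can be sent as keyword arguments, e.g. `client.chat.completions.create(**body)`, and the description read from `choices[0].message.content`.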

The descriptions not only get into what’s happening in the image but also interpret aspects of the layout and form, and even the artistic style. You can imagine using this to find words for techniques and styles you find compelling.

Note that if you look at the image of the mushroom foragers, you’ll find a few hallucinations in the detailed description. For instance, the person in the center is holding a mushroom, not a magnifying glass. To be honest, it is a bit hard to tell even with the human eye, and it’s interesting to see how confident the LLM is anyway. Future work could explore conveying the model’s confidence level more clearly.

If you can automate these more detailed descriptions, it opens up possibilities like embedding them for improved image search. Scott Dasse, the chief design officer at Upstatement, says that when it comes to art, clients generally have one of two problems: either they don’t have enough media, or they have too much. So if a client has a ton of media, embedding the image descriptions can help search the archive for relevant images (matching on both content and form) given a prompt, an article, or some other description.
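A minimal sketch of that search step, assuming each image description has already been turned into a vector by some embedding model (the post doesn’t name one). Cosine similarity ranks the archive against an embedded query such as an article. The `search_images` helper, the three-dimensional toy vectors, and the file names are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_images(query_vec, index, k=3):
    """Return the k image IDs whose description embeddings best match the query."""
    ranked = sorted(index.items(),
                    key=lambda item: cosine_similarity(query_vec, item[1]),
                    reverse=True)
    return [image_id for image_id, _ in ranked[:k]]


# Toy vectors standing in for embeddings of generated image descriptions.
index = {
    "forest-foragers.jpg": [0.9, 0.1, 0.0],
    "campus-aerial.jpg": [0.0, 0.2, 0.9],
    "mushroom-macro.jpg": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]  # e.g. an embedded article about mushroom foraging
print(search_images(query, index, k=2))  # ['forest-foragers.jpg', 'mushroom-macro.jpg']
```

Real embeddings have hundreds or thousands of dimensions, and a large archive would usually live in a vector index rather than a dict, but the ranking idea is the same.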

On the other hand, if they don’t have enough media, this method can help an LLM understand the right style of images in order to generate more. You could create a moodboard with a client, have the LLM put words to the style across the board, and then use that description in image generation.

What other possibilities do you see when you can automatically generate words to describe an image?

Prompt engineering tips—on iteratively improving prompts

Prompt engineering was key for the demo, and to come up with the different prompts I used an iterative technique to have the LLM reflect on its own output and improve on it:

- I asked ChatGPT for a prompt to generate alt text.

- I asked ChatGPT to give me guidelines for good alt text.

- I asked it to compare the prompt against the guidelines and identify what was missing. It explained how the prompt could be improved and gave an updated prompt.

- I repeated the previous step over and over. It kept improving the prompt until it judged that the prompt captured the guidelines effectively.

- The final prompt is the iteratively improved prompt.

I used this method to develop both the prompt for the most efficient image description and the prompt for the more detailed one.
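The refinement loop can be sketched in a few lines. This is an illustration under assumptions, not the code behind the demo: `complete` is a hypothetical stand-in for whatever sends one message to an LLM and returns its reply; the model is asked to return only the revised prompt (a simplification, since in the process above it also explained its changes) so the reply can be fed straight back in; and the `DONE` stop word and `max_rounds` cap are added so the loop always ends.

```python
from typing import Callable


def refine_prompt(complete: Callable[[str], str], task: str, max_rounds: int = 5) -> str:
    """Iteratively improve a prompt by having the model critique it against guidelines."""
    # Steps 1 and 2: a first-draft prompt and a set of guidelines.
    prompt = complete(f"Write a prompt for an LLM that will {task}.")
    guidelines = complete(f"List guidelines for doing this well: {task}.")
    # Steps 3 and 4: compare, revise, and repeat until the model is satisfied.
    for _ in range(max_rounds):
        reply = complete(
            "Here is a prompt and a set of guidelines.\n"
            f"PROMPT:\n{prompt}\n\nGUIDELINES:\n{guidelines}\n\n"
            "What does the prompt miss? If it already captures the guidelines, "
            "reply with exactly DONE. Otherwise reply with only the improved prompt."
        )
        if reply.strip() == "DONE":
            break
        prompt = reply.strip()
    # Step 5: the final prompt is the iteratively improved one.
    return prompt
```

To run it for real, pass a function that wraps your LLM client, for example one that calls the OpenAI Python SDK’s `client.chat.completions.create(...)` and returns `choices[0].message.content`.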

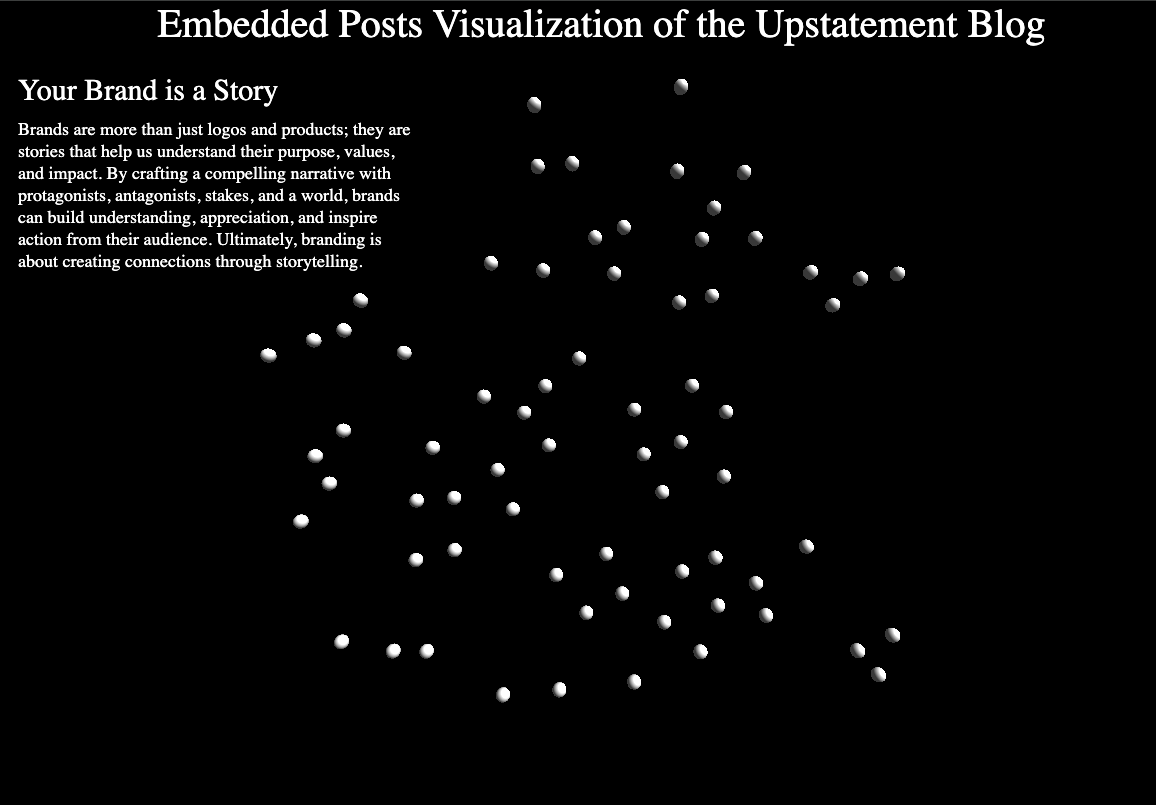

Prototype 2: Spatially browse a website

When you land on a website, it can be hard to get an idea of how big it is and what you can expect to find on it. If you were taking a trip somewhere, you could get a sense of scope by looking at a map and browsing points of interest. While you wouldn’t know all the details, you could get an overview to help you orient and understand where one place is in relation to another.

Embeddings are a key building block of machine learning. An embedding is a vector representation of some media, be it text or an image; think of it as how the computer sees the text you give it. What’s interesting about embeddings is that semantically similar things end up closer together in the embedding space.

Embeddings could be a way to create a map of a website. In this next demo, I took posts from the Upstatement blog and used the post embeddings to create a visualization where similar posts are closer together. I used t-SNE to reduce the embedding vectors to two dimensions so I could plot them.
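The post doesn’t show how the projection was done, so here is a self-contained illustration of the “reduce to 2D, then plot” step. Note that it swaps in PCA (computed with power iteration in plain Python) rather than t-SNE: PCA is linear and deterministic, which makes it easy to show in a few lines, but it preserves overall variance rather than the local neighborhoods t-SNE focuses on. The toy 4-dimensional vectors and the `project_2d` helper are assumptions standing in for real post embeddings.

```python
import math
import random


def project_2d(vectors, iterations=200, seed=0):
    """Project vectors onto their top two principal components (PCA).

    Uses power iteration with deflation on the covariance matrix,
    so only the standard library is needed.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(2):
        vec = [rng.random() for _ in range(d)]
        for _ in range(iterations):
            nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in nxt)) or 1.0
            vec = [x / norm for x in nxt]
        # Eigenvalue via the Rayleigh quotient, then deflate so the next
        # pass finds the second-largest direction of variance.
        lam = sum(vec[i] * cov[i][j] * vec[j] for i in range(d) for j in range(d))
        cov = [[cov[i][j] - lam * vec[i] * vec[j] for j in range(d)] for i in range(d)]
        components.append(vec)
    return [tuple(sum(r[j] * c[j] for j in range(d)) for c in components) for r in centered]


# Toy "embeddings": posts 0 and 1 share one theme, posts 2 and 3 another.
posts = [
    [1.0, 0.9, 0.0, 0.0],
    [0.9, 1.0, 0.1, 0.0],
    [0.0, 0.1, 1.0, 0.9],
    [0.0, 0.0, 0.9, 1.0],
]
points = project_2d(posts)
print([(round(x, 2), round(y, 2)) for x, y in points])
```

For the nonlinear version used in the demo, a library implementation such as scikit-learn’s `sklearn.manifold.TSNE(n_components=2)` with `fit_transform` is the usual route; its `perplexity` must be smaller than the number of samples, which matters for a small blog.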

I could hover over a node to see the title, and then click it to read the post. I wanted to know more about the posts before clicking, so I generated a summary of each post. That way, when I hovered over a node, I could read the summary before clicking in.

You can imagine using this to find new ways to organize websites, or even dynamically adjust them based on the reader. Using space can be an intuitive way to explore and understand what’s on a site holistically.

You can watch the demo here—what are other possibilities you see with spatial browsing, or using LLMs to navigate sites? Would love to hear what you think!

Prototype 3: AI-generated topics and categories for a website

After the spatial visualization demo, I wanted to give a better idea of what each post is about, and what the blog covered as a whole. What if I could generate additional meta information about the posts?

Using the generated summaries of all of the posts, I asked ChatGPT to give me a list of 20 topics, and then 5 categories that would be good for a blog. Then, given the post text, I used the LLM to select a single category for each post and to tag each post with topics ordered by relevance. The category determined the node color, which is why I wanted exactly one category per post. The topics could be fuzzier; a post could easily belong to multiple topics.
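Why one category but many topics becomes clear when the labels feed the visualization: a node can only have one color. A minimal sketch, assuming the plotted points are plain dicts; the category names, hex colors, fallback color, and `make_node` helper are hypothetical, since the post doesn’t list the five generated categories.

```python
# Hypothetical names: the post doesn't list the five generated categories.
CATEGORIES = ["Design", "Engineering", "Strategy", "Culture", "Case Studies"]
PALETTE = ["#e4572e", "#29335c", "#f3a712", "#669bbc", "#a8c686"]
COLOR_BY_CATEGORY = dict(zip(CATEGORIES, PALETTE))
FALLBACK_COLOR = "#999999"  # used if the model returns a label outside the list


def make_node(title, x, y, category, topics):
    """One plot node: exactly one category sets the color; topics stay a ranked list."""
    known = category in COLOR_BY_CATEGORY
    return {
        "title": title,
        "x": x,
        "y": y,
        "category": category if known else None,
        "color": COLOR_BY_CATEGORY[category] if known else FALLBACK_COLOR,
        "topics": list(topics),  # most relevant first, as returned by the model
    }


node = make_node("Hypothetical post", 0.4, -1.2, "Design", ["Branding", "Accessibility"])
print(node["color"], node["topics"])
```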

The automatic categories and topics give a way to group posts and also to create better post previews, which can increase engagement and recirculation. This is useful for sites that publish often, like news platforms, and information-dense sites, like university websites. You can imagine connecting this with audience stats to get an idea of which topics are most interesting to your readership.

You can watch the demo video here or check out the prototype here. This is one way to use AI to help with publishing and organizing information on websites. What else comes to mind?

Prompt engineering tips—automatic machine labeling

For the topic tags, use this prompt (it gave me 20 labels): "Take a look at these summaries. Give me the key topics that these summaries are about. Name them as if they are categories in a blog."

For the single category label, use this prompt (it gave me 5 labels): "Now take a look at the summaries and pick only five key topics that they are about. Name them as if they are categories in a blog."

Then, for the actual category labeling, use this prompt with the JSON response format parameter in the ChatGPT API: "Take a look at the text and categories and give me the category that applies most to the text. Return as json with the name categories and a single entry being the name of the category."

And for labeling topics: "Take a look at the text and categories and give me the category or categories that apply to the text. Order them by most relevant. Return as a json array with the name categories."
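A minimal sketch of the labeling call, assuming the OpenAI Chat Completions API with JSON mode (`response_format={"type": "json_object"}`). JSON mode expects the word “json” somewhere in the messages, which these prompts satisfy, and it returns a JSON object, so the array the topic prompt asks for arrives as the value under `categories`. The prompt text is copied from the post; the model name, the system/user message split, and both helper functions are assumptions.

```python
import json

# Prompt text copied from the post.
CATEGORY_PROMPT = (
    "Take a look at the text and categories and give me the category that applies "
    "most to the text. Return as json with the name categories and a single entry "
    "being the name of the category."
)


def build_label_request(prompt, labels, post_text, model="gpt-4-1106-preview"):
    """Chat Completions request body with JSON mode enabled (model name is an assumption)."""
    return {
        "model": model,
        "response_format": {"type": "json_object"},
        "messages": [
            {"role": "system", "content": prompt},
            {"role": "user", "content": f"Categories: {', '.join(labels)}\n\nText:\n{post_text}"},
        ],
    }


def parse_labels(reply_content, allowed):
    """Read the "categories" value from the model's JSON reply, keeping its order."""
    value = json.loads(reply_content).get("categories", [])
    if isinstance(value, str):  # a single category may come back as a bare string
        value = [value]
    # Drop any label the model invented that isn't in the allowed list.
    return [label for label in value if label in allowed]
```

For topic tagging, the same functions work with the topic prompt and the list of 20 topics; filtering against the allowed list guards against labels that weren’t among the generated ones.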

What’s next: smart navigation

As far as we’ve come with websites, people still struggle to find what they’re looking for online. This is even more challenging on information-dense, complex websites, like a university website or news platform. What if you don’t know exactly what to search for? Or which navigation category the thing you need falls under? Some use cases fall through the cracks or are overlooked, creating forks in the road and causing massive frustration.

Generative AI tooling is emerging and improving quickly. It’s helping to drive exciting products like Arc Search, and there are huge opportunities for rethinking search and navigation. We’re currently digging into a client use case. Stay tuned for what’s next in Discovery AI! You can follow along on Instagram and LinkedIn.

Discovery AI is a series exploring the future of websites. ✨🚀🌐